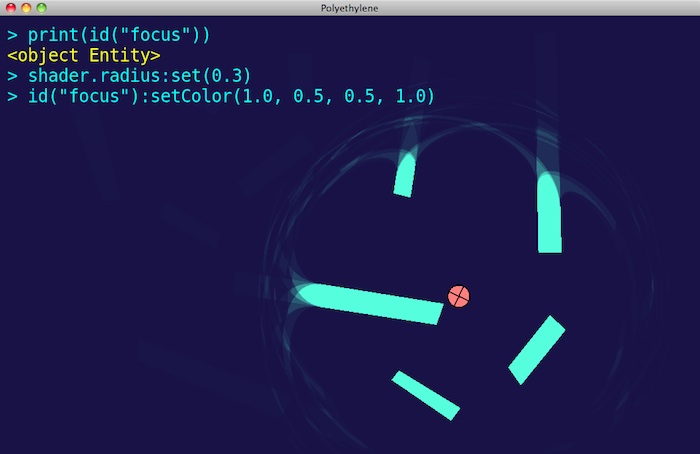

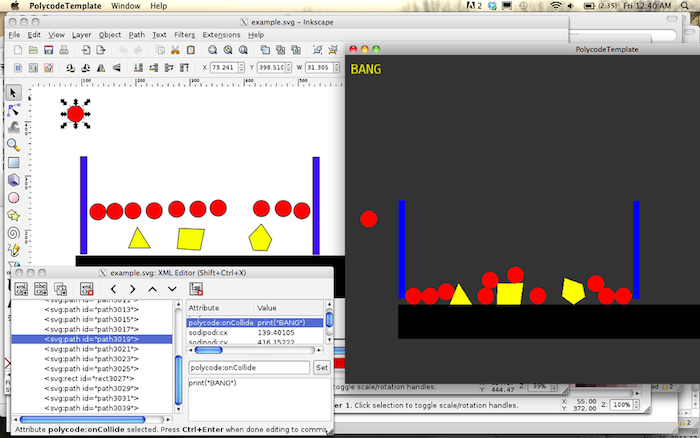

No Compiler, part 3: A second, tiny language embedded inside Lua

Saturday, April 16th, 2016In the last couple weeks I’ve been chasing this weird daydream of writing scripts that directly talk to the LLVM compiler backend library, thus making software without a compiler frontend. Each script would be its own tiny frontend. My plan was this would let me jump directly into the "interesting bits" of programming language development. I wrote a couple of blog posts about this project, but so far those posts have only been about me learning to use LLVM. I’ve sort of got a handle on that now, so it’s time for next steps.

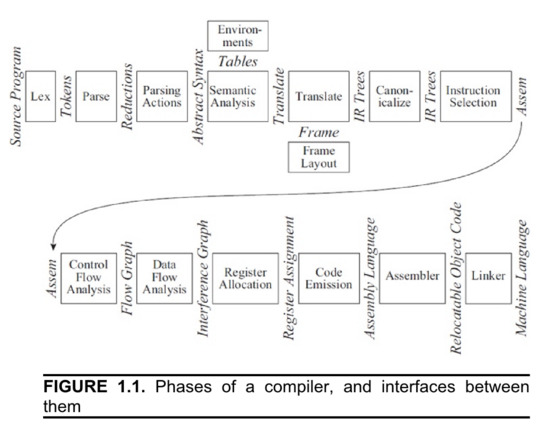

Here’s what "next steps" means to me: Normally, if you were developing a programming language with LLVM, you’d design an "abstract syntax tree" that roughly captures the semantics of the language you are designing. You’d design a syntax that reduces to your AST, and then you’d write code to reduce your AST to the IR ("intermediate representation") that the LLVM library consumes. What I imagine is that in my not-a-compiler experiments, I’d still have an AST, just my program would be directly constructing the AST as a data structure in memory instead of building it from a textual representation (or in other words: a "programming language"). This would let me try out different programming language semantics without having to pick a syntax. Once I’ve settled on a semantics, I could grow a syntax around it.

Or… maybe not? The thing that makes this idea seem "weird" and not just developing a language compiler piecemeal is the thought that maybe I could never bother with the syntax and just do entire projects by writing these scripts that generate ASTs as data structures. In the Haskell world they have this very specific meaning to the word "DSL"— normally that’s short for "domain specific language" and refers to writing a little language as a helper for some task, but what Haskell users like to do is design little sub-languages entirely out of idiomatic Haskell. They inject a few functions and operators (in Haskell also functions) into a namespace and call only those, and because Haskell has flexible syntax and a weird compiler this is as good as writing a whole new language compiler. I think what I want to do is create a library that effectively constructs that kind of DSL within Lua. I’d be writing software and my "programming language" would be an idiomatic Lua that, when executed by the Lua interpreter, generates my program’s executable.

Housekeeping

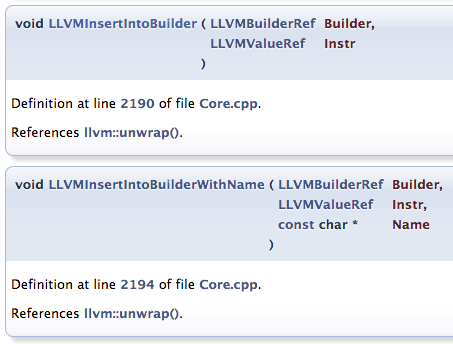

What I’ve been doing so far is something a little similar— instead of designing an AST and constructing it in memory, I treat the in-memory representation of LLVM IR as if it were my "AST" and construct that in memory. This works okay, but it is clumsy. It’s clumsy first off because it is a clumsy thing to be doing— LLVM IR is basically assembly— and second off because my setup for calling the builder functions is a little clumsy. Whatever I build next will be based on the LLVM wrapper I’ve been using so far, so before I move on I should make my LLVM wrapper at least nice to use.

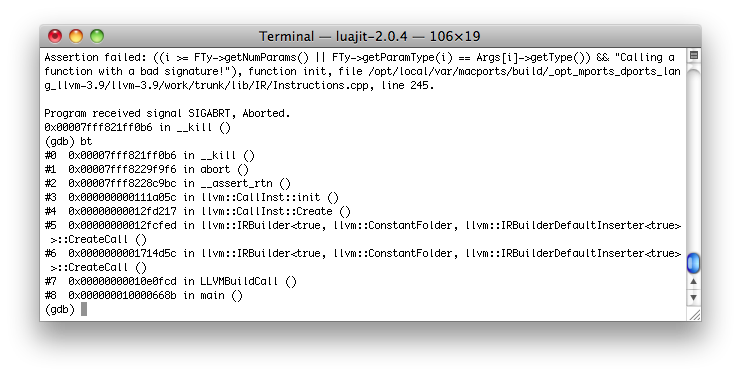

First things first: The version of LLVM I’ve been using doesn’t exist. I downloaded LLVM from MacPorts, and the newest version they had listed was 3.9, so I installed that. It turns out 3.9 hasn’t been released yet and I’m using code from LLVM’s development branch. (Jeremy is very thorough.) This is pretty bad for my purposes because apparently 3.9 still has significant API changes planned before official release, so if I check out this code on a new computer in two years and try to build it it won’t work. No good. Easy to fix though: I download a copy of LLVM 3.8, repeat the process of handmaking Lua bindings from my first blog post, and rename the 3.9 bindings to indicate it’s a particular beta version. When running the script you now have a choice of LLVM wrappers, 3_8 or 3_9-r259341.

Second off: again, these wrappers are clumsy. Here’s a stretch of code from my last blog post:

local valueDeref = LLVM.LLVMBuildLoad(builder.llvm.builder, value, "ValueDeref") local divisorDeref = LLVM.LLVMBuildLoad(builder.llvm.builder, divisor, "DivisorDeref") -- printf divisor local printfArgs = ffi.new("LLVMValueRef [2]") printfArgs[0] = LLVM.LLVMBuildGlobalStringPtr(builder.llvm.builder, "%d ", "tmpstring") printfArgs[1] = divisorDeref LLVM.LLVMBuildCall(builder.llvm.builder, printfFn, printfArgs, 2, "tmpcall");

There is a bunch of repetition and boilerplate here. LLVM.LLVM and builder.llvm.builder appear over and over. A lot of the vertical space is taken up by the ffi.new idiom, which I have to use over and over because the C functions accept C arrays, not Lua lists, and this ffi.new thing is how you make a C array in Lua. This all makes the code hard to read and therefore bugs hard to spot. There are some things I can do about this.

-

LLVM.LLVMis annoyingI’m using LLVM through the C interface. Because C has no namespacing, LLVM-C attempts to be a good citizen by prefixing every single symbol it exports (functions, structures, enums, everything) with "LLVM". Because Lua does have namespacing, it quarantines all the imported symbols inside the LLVM table. This means "LLVM.LLVM" is repeated endlessly throughout my code, like a constant background static.

I can fix this using weird metatable magic. In Lua (unless you’re using the C FFI) the sole data structure is the table, which is a built-in dictionary backed by a hashtable or something. Object-like capabilities are offered using something called the "metatable"; each table may have an invisibly associated partner table containing a set of standardized functions, which get invoked when the interpreter hits an exceptional case while working with the real table. Functions that can be in the metatable include

__index, which is called if a key is not found, or__newindex, which is called before adding a new key binding, or__add, which is called if you attempt to use arithmetic+on a table (normally illegal). The normal use of metatables is to emulate object-oriented programming; you can easily design a class system or prototype system and emulate it using the metatables. At core though an empty table with a custom metatable is basically a magical thing where a custom function gets called anytime you read from it, write to it, or do arithmetic on it.Here’s what I’m going to use this for:

-- Pass this one of the metatables below function emptyWithMetatable(metatable) local table = {} setmetatable(table, metatable) return table end -- Transforms table.someCall into LLVM.LLVMSomeCall llvmMetatable = { __index = function(_, key) return LLVM["LLVM" .. capitalize(key)] end } llvm = emptyWithMetatable(llvmMetatable)The

llvmobject is empty, so every attempt to read from it invokes the__indexfunction; the__indexfunction capitalizes the lookup key and prepends "LLVM". In other words, sayingllvm.int32Typeis now equivalent to sayingLLVM.LLVMInt32Type.Actually, I can do a little better than this— the code above means on every single LLVM function call we’ll be doing a string manipulation on the name, which is less efficient than it could be. But I can add a higher-order function and:

-- Wrap an __index function in this and it will cache the results in the table, -- such that your __index function will only be called once per unique index. local function cacheAndReturn(fn) return function(table, key) local value = fn(table, key) table[key] = value return value end end -- Transforms table.someCall into LLVM.LLVMSomeCall llvmMetatable = { __index = cacheAndReturn(function(_, key) return LLVM["LLVM" .. capitalize(key)] end) } llvm = emptyWithMetatable(llvmMetatable)That might be a little harder to read, but now every time I look up a key in my magic

llvmtable, the metatable will cache the result inllvm. On the next attempt to read that key, Lua will just find the key,__indexwill not be called, and no function call or string manipulation will occur. -

Repetitive first argument

The LLVM library was designed to be object-oriented; most functions act "on" an object, such as a "module" or a "builder". Again I am using the C interface, where the wrapper authors dealt with object methods by just always passing the relevant object as the first argument. In my scripts however I tend to create one module or one builder and use them the entire program, so passing

builder.llvm.builderon every single function— as happens in the code snippet above— gets annoying.Again I can make this look a little prettier with metatables:

-- Transforms table.key(input) into target.key(arg, input) -- In other words, this wraps a table while prebinding all function calls with one argument function bind1Metatable(target, arg) return { __index = cacheAndReturn(function(table, key) local func = target[key] return function(...) return func(arg, ...) end end) } end builder = emptyWithMetatable(bind1Metatable(llvm, llvm.createBuilder()))I now have an object

builderwhich holds an inner LLVM builder object, such that sayingbuilder.buildBr(loop)is equivalent to sayingLLVM.LLVMBuildBR(innerBuilder, loop). Again I’m using the cache wrapper, so every time I access a new function frombuilderit has to construct a prebound wrapper, but on future lookups it just regurgitates the wrapper from before. (Note that as a tiny, probably-inconsequential optimization I’m calculating the value offuncoutside of the constructed function, so that gets calculated only once, too). -

ffi.newis annoyingThis one doesn’t even require metatables to work around:

function cArray(type, values) local arrayType = string.format("%s [%d]", type, #values) local array = ffi.new(arrayType) for i,v in ipairs(values) do array[i-1] = v end return array endWith this helper function,

cArray("int", {1,2,3})gets me a C FFI array containing (1,2,3). Simple. Notice the index garbage since Lua is 1-indexed.Since I will almost always be using the types

LLVMTypeRefandLLVMValueRef, I can simplify this a little further even:typeArray = func.bind1(cArray, "LLVMTypeRef") -- Uses Penlight 'func' library valueArray = func.bind1(cArray, "LLVMValueRef")

With these helpers, my snippet from above now looks like:

local valueDeref = builder.buildLoad(value, "ValueDeref") local divisorDeref = builder.buildLoad(divisor, "DivisorDeref") -- printf divisor local printfArgs = valueArray { builder.buildGlobalStringPtr("%d ", "tmpstring"), divisorDeref } builder.buildCall(printfFn, printfArgs, 2, "tmpcall")

That is way more readable. In the adjusted code, most lines are half the length they were at the end of the last post. By the way, notice me saying valueArray { }; in Lua, if a function name is followed by curly brackets, Lua assumes you were trying to invoke the function with the table inside the { } as a single argument. I will be abusing this heavily later.

Here’s the code so far. Now I get to move on to the fun stuff.

Shadow language [A DSL]

It’s time to design my "DSL" (again: "domain-specific language") for specifying programs. After that last stretch, I’m pretty hyped up on lua metatable magic, so I wanna see how metatables can help me.

With empty tables with custom metatables (…ETCMs?), I have these magical semantic things that I can read from, assign to, treat like numbers, and treat like functions, all using the natural syntax for those things. It sure would be nice if reading variables, assigning fields, adding numbers and calling functions in my language could "look like" actually doing those things in normal syntax. I mean, who wants to write LISP? (LISP users, probably.) I imagine I could set up a world where I can say things like:

variables.x = 3 variables.y = variables.x + 5 functions.print( variables.y )

…where variables and functions are ETCMs; and the objects you get if you read from those tables are ETCMs; and when this code runs it might look like I’m assigning 3 to x, adding 5 to that to get y, and then calling print with the value of y, but I’m not actually doing any of these things. Instead, I’m implicitly calling custom metatable functions that are registering each of these actions as I perform them, and at the end I can extract the record of which metatable functions I called as the specification of my program. The final, real program would "shadow" the fake actions I performed in Lua program.

I decide I’m going to call this DSL "shadow language", and call the magical recording-interactions-only ETCMs you interact with in it "shadow objects", because referring to "the DSL" all the way through this blog post would get really confusing and "ETCM"s might be the worst piece of tech terminology I’ve ever heard.

Instead of trying to write a full spec for the shadow DSL, I decide to write a program in it. I rewrite my number-factoring program from the last blog post:

backend.module("main", function(e) e.fn.printf = e.fn(e.type.int32, {e.type.int8.ptr, e.type.vararg}) e.fn.atoi = e.fn(e.type.int32, {e.type.int8.ptr}) e.fn.printFactors = e.fn(nil, {e.type.int32}, function(e, input) e.var.value = input e.var.divisor = 2 e.while(e.var.divisor > e.var.value, e.block(function(e) e.if(e.var.value % e.var.divisor == 0, e.block(function(e) e.fn.printf:call("%d ", e.var.divisor) e.var.value = e.var.value / e.var.divisor end), e.block(function(e) e.var.divisor = e.var.divisor + 1 end) ) end)) end) e.fn.main = e.fn(e.int32, {e.type.int32, e.type.int32.ptr}, function(e, argc, argv) e.if(argc == 2, e.block(function(e) e.fn.printFactors:call( e.fn.atoi( argv.idx(1) ) ) e.return(0) end), e.block(function(e) e.fn.printf:call("Please enter exactly one number as argument") e.return(1) end) ) end) end)

There’s a few things happening here. I’ll break some of them down:

- The environment: At all times, I’m working off of this "environment" variable

e.econtains a container fieldvar(previouslyvariables), a containerfn(previouslyfunctions), containerstypesandblocks, and some helpers likeifandwhile. Each of the containers can be assigned to, to register or (for mutable things like variables) mutate a named item (a variable, function, type or block label). Containers can also be read from, to get a reference (maybe a forward reference) to a named item. Or containers can be called like a function, to create an item in place (for example notice I saye.fn.printFactors = e.fn(...). - Construction functions: When I ask a container to create a new function, or a new block, I pass in a lua function. This is because "code", in the shadow language, is encoded in a sequence of Lua statements. So if I want to nest a stretch of "code" inside another, I have to control the sequence of execution somehow. I do that by embedding the "inner" code in its own function, which will get passed its own environment, and will run when the DSL library is ready for it to run.

- "

call": One weird thing: In shadow language a function call doesn’t actually call the function but just returns a record of the idea that a function needs to be called to produce a particular value. When I saye.var.x = e.fn.atoi( "3" )in the code above, theatoi( "3" )isn’t a procedure that has an effect, it’s an item in an expression tree. This means if you callede.fn.aoti( "3" )alone on a line it would have literally no effect, since you didn’t do anything that registers that particular expression tree as an instruction that needs to be emitted. So I need some way to tell the shadow language I want a standalone function call to be emitted, and the way I do this is the awkward:callon the handful of void-returning functions I call here. (If this paragraph confused you: This is my fault, not yours. This bit is confusing.)

So: I have a little DSL! Is it a good DSL? Well, the "printFactors" program is a lot shorter in this than it was in the last post, when I was writing out LLVM IR. I don’t really like how the new program looks, though: The e. everywhere is bugging me. That looks to me like boilerplate, and as previously insinuated, I don’t like boilerplate. When I see boilerplate my eyes start glazing over, and when my eyes glaze over I start missing bugs. Luckily this is something I can fix with more weirdo Lua magic! Lua has a ridiculously abusable-looking feature called setfenv(), which lets you take a function and, before you call it, change the global variables which that function perceives as existing. If I plan to use this, I can eliminate e entirely by making it implicit:

backend.module("main", function() fn.printf = fn(type.int32, {type.int8.ptr, type.vararg}) fn.atoi = fn(type.int32, {type.int8.ptr}) fn.printFactors = fn(nil, {type.int32}, function(input) var.value = input var.divisor = 2 do.while(var.divisor > var.value, function() do.if(var.value % var.divisor == 0, function() do.fn.printf("%d ", var.divisor) var.value = var.value / var.divisor end, function() var.divisor = var.divisor + 1 end) end) end) fn.main = fn(int32, {type.int32, type.int32.ptr}, function(argc, argv) do.if(argc == 2, function() do.fn.printFactors( fn.atoi( argv.idx(1) ) ) do.return(0) end, function() do.fn.printf("Please enter exactly one number as argument") do.return(1) end) end) end)

Here the various members of e— fn, var, etc— are forcibly populated into each function’s scope before it’s executed. fn doesn’t exist before builder.module() gets called at the top, and it doesn’t exist afterward. It will only exist in the temporary global scope injected by setfenv(). To limit the number of variables I need to inject, I quarantine some of the helpers like if and while into a do object, implied to be the container for non-expression statements— which, conveniently, gives me a better way of handling that :call thing from before.

And once I have things set up like this, I… actually don’t mind this! This is sort of okay. I can read this code. It’s still a bit cumbersome, and the scattered function()s everywhere are more than a little odd, but I’d rather write this than COBOL. This is a good start.

So: I have now a program written in my little program-specification DSL. Can I get to a point where I can compile it?

Improvisational compiler-stage design

When I was originally daydreaming about the shadow DSL, I think I imagined that each of the metatable calls would translate directly into an LLVM Build invocation. If I said var.x + var.y, for example, that would call a metatable __add somewhere which every time it is invoked would just plain call builder.buildAdd( builder.buildLoad(x, ""), builder.buildLoad(y, "") ) and return the resulting LLVMValueRef. This would have been a bad idea even by the standards of this project. Besides being brittle, it wouldn’t work with the syntax in the "printFactors" sample; if I’m using the LLVM builder I need to alloca the variables first thing when generating the function, but with my current DSL I won’t know what variables a function uses until after I’ve executed the DSL code. I need to do something a little bit smarter. I need to create an AST.

Here, finally, I’m doing something normal that a normal compiler would do. There’s probably best practices for how to design an AST. I don’t know what those are so I decide to wing it. I try to imagine what simple structure could be easily constructed from the shadow DSL above, which could also be easily decomposed into LLVM IR.

I imagine I’ll have a tree made of objects of six types: AstModule, AstFn, AstBody, AstBlock, AstType, AstExpr. "Body" is separate from "Function" since external functions like printf don’t have bodies. And the spec for these objects… well, just writing out a sample and then assuming I can craft a library to fit it later seemed to work okay for the shadow language, so maybe it can work for prototyping the AST classes also. I sit down and write out code instantiating an AST for something like my printFactors program inline:

module = AstModule{name="main"} int32 = AstType{literal=int32Type} int8 = AstType{literal=int8Type} void = AstType{} int8ptr = AstType{ptr=int8} int8ptrptr = AstType{ptr=int8ptr} module.namedFns.printf = AstFn{ret=int32, args={int8ptr}, vararg=true} module.namedFns.atoi = AstFn{ret=int32, args={int8ptr}} module.namedFns.printFactors = AstFn{ret=void, args={int32}, body=AstBody{ vars = { value=int32, divisor=int32 }, namedBlocks={ entry=AstBlock{ exprs={ AstExpr{op='store', var='value', value=AstExpr{op='getParam',idx=0}}, AstExpr{op='store', var='divisor', value=AstExpr{op='literal',value=2}} }, exit='loop' }, loop=AstBlock{ exprs={ AstExpr{op='branch', cond=AstExpr{op='bin', op2='>=', value={ AstExpr{op='load', var='value'}, AstExpr{op='load', var='divisor'} }}, y='modulo', n='done'} } }, modulo=AstBlock{ exprs={ AstExpr{op='branch', cond=AstExpr{op='bin', op2='==', value={ AstExpr{op='bin', op2='%', value={ AstExpr{op='load', var='value'}, AstExpr{op='load', var='divisor'} }}, AstExpr{op='literal', value=0}, }}, y='divide', n='increment'} } }, ...

This… goes on, for quite a while. I’ll spare you pasting the entire thing. It’s actually kind of dreadful. The written-out AST turns out to be almost as long as the LLVM-assembly-written-out-in-lua blob from my last blog post, but is somehow less readable. I guess that’s probably okay— the AST isn’t designed to be human-readable or writable.

The job of my AST classes is going to be to hold the structure of the program and generate LLVM objects from that structure. They’re all going to inherit from an AstBase:

class.AstBase() function AstBase:_init(spec) self.spec = spec end function AstBase:llvm() if not self.cached then self.cached = self:render() end return self.cached end

The constructor here follows a pattern that I use in every single Lua program I write, but I’ve never seen anyone else do; the arguments to the constructor are taken as a structure, which I store as self.spec. Normally in OO programming when you write constructors you take an argument list and then your constructor assigns each positional argument to a named variable in the object. I hate writing these. It’s just the most tedious, unnecessary-feeling thing. So instead in Lua, where I’m allowed to do this, my constructors take a structure full of named fields— you’ll notice in my sample code above, the constructor invocations all use {} brackets instead of ()— and nothing needs to be unpacked. Notice that this means all the constructor arguments are effectively optional.

Ultimately what these AST classes exist for is to convert a spec structure into an LLVM primitive. You pass in the spec to the constructor, and you fetch back an LLVM primitive by calling llvm(). To implement an AST class you only need to implement render(), which llvm() calls (and caches the result of, to guarantee render() only gets called once even if a single object shows up multiple places in the tree).

I try implementing one of the classes— AstType, which I expect will be the simplest:

class.AstType(AstBase) function AstType:_init(spec) self:super(spec) end function AstType:render() if self.spec.literal then return self.spec.literal end if self.spec.ptr then return llvm.pointerType(self.spec.ptr:llvm(), 0) end return llvm.voidType() end

So AstType expects one of three different specs:

{literal=}, with a premade LLVM type object asliteral; in this casellvm()returns the type object.{ptr=}, with anAstTypeasptr; in this casellvm()returns a pointer type of the child type.- Empty

{}, in which case it returns the void type.

(The constructor in this code snippet is unnecessary, by the way, since the default behavior with Penlight classes is to call the super constructor. But it’s nice to have the constructor already pasted in.)

So this is pretty straightforward! I have a program written out as calls to the constructors of these AST classes; I have a class heirarchy; and now I just need to fill in those render() methods and I’ll be able to generate an entire LLVM module by calling llvm() once. Everything I’ve done in this project so far has proceeded really quickly, so I sit down on a Sunday morning and hope I’ll be able to finish implementing the AST classes that day.

…

…

…

Two weeks later…

Slightly less improvisational compiler-stage design

Okay. What am I actually doing here?

I want to be able to specify programs as data structures in memory. I want this so I can design programming interfaces that construct those data structures. At the moment I want this so that I can implement my shadow DSL, but that’s not the long term goal. I don’t really care about the shadow language; it’s an experiment for a silly blog post. But I believe I’ll be making more complicated things later, and when I do so I’ll be using this AST framework. Honestly what I’m probably going to do with the AST framework before too long is just plug LPEG into it, at which point— despite my protestations— I will have literally written a compiler.

At the moment, when you "render" this AST into a program, it does so using LLVM. But I could imagine, at some point, making a version of the AST framework that uses the same interface but generates (for example) Javascript instead of LLVM IR. (You can generate Javascript from LLVM IR, but it’s pretty ugly Javascript since LLVM IR’s model is simpler than Javascript is.) So it would be good to design this so it can easily be adapted to support code generation models that don’t look like LLVM’s.

At the moment the design doesn’t actually support even LLVM. Let’s talk for a moment about what LLVM does.

When I call one of those LLVM build functions:

local two = llvm.constInt(int32Type, 2, false) local three = llvm.constInt(int32Type, 3, false) builder.buildAdd(two, three, "sum")

When I call builder.buildAdd, executing that call has a side effect. It’s writing a line of LLVM assembly into a ledger somewhere:

%sum = add i32 2, i32 3

Sometimes I use a value that a build function returns:

local value = builder.buildAdd(two, three, "sum") builder.buildRet(value)

What does buildAdd return? Well, it returns %sum from the assembly snippet. This last bit of Lua is telling the builder to write in its ledger:

%sum = add i32 2, i32 3 ret i32 %sum

Since LLVM calls have side effects, it matters when you call LLVM functions. There are some things order matters for and some it doesn’t; you can construct a type or a function at any time, for example. LLVMConstInt likewise does not care about order. But it does matter the order you create blocks in, since the first block added to a function is always the entry. And it matters the order you build expressions/instructions in, because when you build an instruction its "name" gets added to the current block and if you write your instruction into the ledger for the wrong block you might not be able to reference it later.

So this means my first-attempt design for AstBase, where ast objects render their contents exactly once the first time you try to read them, isn’t very good. On my second attempt, render gets replaced with three functions: register(), render(), and emit().

In my second-attempt design, compiling happens in two passes. First, every object in the entire AST gets a register() call. This allows the AST to inspect itself and make sure things are correct, and if anything the AST does is time-sensitive it can use register() as an opportunity to note that down, so that in the second pass it knows to do things in the correct order. On the second pass, each object gets called with exactly one of emit() or render(). emit() and render() are actually the same thing, but render() is for things which return a value after emitting themselves and emit() is for things that don’t. When you emit() a module, there’s no point in returning the LLVM module object, but when you emit an add instruction you will tend to immediately need the LLVM object it returns.

The two passes are managed by the AstModule object, which is the top-level entry to everything else. It doesn’t have a register phase; to create a program you create an AstModule object and call module:emit(). AstModule‘s emit looks like:

-- Register all functions for k,v in pairs(self.namedFns) do v.name = k v:register() end -- Emit all functions for _,v in pairs(self.namedFns) do v:emit() end -- Process backend:verify() for _,v in pairs(self.namedFns) do backend:optimize(v.llvmObj) end -- Write backend:debugDump() backend:emitToFile()

What happens in the register phase? Well, functions register themselves with the module; function bodies build a list of their blocks and a list of their local variables; and expressions notify their function bodies which blocks and variables they use. Every leaf in the AST gets visited once while this is happening. If any particular node has references to other AstBase objects, then when it is register()ed it needs to call register() on those as well.

Here a bit of a problem comes up, and it requires me to make one more tweak to my model. If an AstExpr has to notify its AstBody about variables it uses, that means it needs to be able to find its AstBody. Actually, potentially, an expr might need to be able to get references to any one of (1) its module, (2) its function, (3) its body or (4) its block. I briefly consider passing all four of these down as arguments to every render() call, or maybe having each AstExpr keep a reference to all four. Both of these options sound miserable. I go with something that might not be as good software engineering, but works: I track a global "current" module, function, body and block. I give AstBase a function:

function AstBase:recur(label, fn) local prev = backend[label] -- Allow nesting backend[label] = self fn() backend[label] = prev end

The generation process for modules and blocks nests nicely— each expression belongs to at most one block, each block belongs to at most one function, etc. When we call register() on an AstBody, we’ll be working with that body until the function completes. So we can use the shared global backend object to track the fact of, for example, which body we are currently working on. The "recur" function takes a function to execute, and is responsible for setting the tracked field before execution and then unsetting it afterward. (It saves and re-sets "prev" rather than just writing to nil at the end because later on I figured out that sometimes you might have to interrupt processing one block to look at another and then come back.) So for example an AstBody could call:

self:recur('currentBody', function() -- Any external functions called in this stretch of code -- will be able to find self at backend.currentBody end)

Armed with these two tweaks— the two-pass tree traversal, and the ‘current’ tracking— my model works much better. There’s just one more idea I need to introduce, and then I’ll be able to show you the code. If you look at the AST sample above, you’ll notice I refer to variables and blocks by name. In practice once I start writing the render() functions it turns out to be a little bit awkward to look things up by name every time you use them. Also, it would be sort of cool if when I’m writing the AST structures I could "inline" small anonymous blocks. For example, toward the end of the full version of my sample AST I wound up writing:

AstExpr{op='branch', -- Condition: Verify that argc, the first argument to main(), is equal to 2 cond=AstExpr{op='bin', op2='==', value={ AstExpr{op='getParam',idx=0}, AstExpr{op='literal',value=2} }}, -- Condition succeed block y=AstBlock{exprs={ -- [[Snip]] -- Here I call printFactors, print a newline, and return 0 }}, -- Condition fail block n=AstBlock{exprs={ AstExpr{op='call', fn='printf', args={ AstExpr{op='literal',value="Please enter exactly one number as argument.\n"} }}, AstExpr{op='return', value=AstExpr{op='literal', value=1}} }} }

Instead of giving names to the blocks to the y and n branches of this branch statement, I just stuck AstBlock objects in there.

What I decide is that by the time emit()/render() is called, all "by name" references should be converted to plain object references— block names to AstBlocks, var names to a new AstVar structure. So for example later on when I implement register() for the branch-type AstExpr, it’s going to say something like:

for _,k in ipairs({"y", "n"}) do if self.spec[k] then self.spec[k] = backend.currentBody:registerBlock(self.spec[k]) end end

registerBlock() will be a "sanitizer" function that takes either an AstBlock or a name; if it’s an AstBlock it will just return it, if it’s a name it will look up the AstBlock for that name and return it. AstExpr:register() is expected to sweep through and replace any block references the expr has with references to whatever registerBlock() returns.

Now, at this point some of you might point out that once I perform this operation, the "AST" is no longer properly a "tree" because the references can reconverge and potentially even loop. WELL MAYBE IT IS SOME KIND OF FREAKY MUTANT LARRY NIVEN SPACE TREE AND YOU SHOULD JUST DEAL.

ast.lua

I am now finally ready to write some code. First off, here’s my AstBase class:

-- Base class for all AST objects class.AstBase() function AstBase:_init(spec) self.spec = spec end function AstBase:llvm() -- Generate then return llvmObj if not self.llvmObj then self.llvmObj = self:render() end return self.llvmObj end function AstBase:render() -- Some objects do not support llvm() error("Could not render this object. You may need to call emit() first") end function AstBase:recur(label, fn) -- Temporarily register with backend and run function local prev = backend[label] -- Allow nesting backend[label] = self fn() backend[label] = prev end

All this code has already appeared in this post— the only new thing is the error-throwing default implementation for render(). Next comes the first of my actual AST object classes:

-- Representation for a module (ie, an object file). Call emit() to create program class.AstModule(AstBase) function AstModule:_init(spec) self:super(spec) self.namedFns = self.spec.namedFns or {} self.spec.namedFns = nil end function AstModule:recur(fn) AstBase.recur(self, 'currentModule', fn) end

I give the individual classes personalized versions of recur() which have their "name" baked in. Here’s AstModule:emit() again, in its final form:

function AstModule:emit() -- Set a global for the duration of this function if backend then error("AstModule:emit called, but backend already exists") end backend = Backend("main") self:recur(function() -- Register all functions for k,v in pairs(self.namedFns) do v.name = k v:register() end -- Emit all functions for _,v in pairs(self.namedFns) do v:emit() end -- Process backend:verify() for _,v in pairs(self.namedFns) do backend:optimize(v.llvmObj) end -- Write backend:debugDump() backend:emitToFile() end) self.backend = backend backend = nil end

Where most of the functions are only responsible for registering themselves with the global backend object, AstModule has to register the backend object itself. One more thing and then we’re done with AstModule:

-- Lookup/sanitization for functions function AstModule:fn(name) -- Note it is assumed ALL modules are registered manually before emission starts if type(name) == 'string' then return self.namedFns[name] else return name -- Assume this is a function object already end end

This is one of those "sanitizer" functions I mentioned, since the module object is responsible for managing functions.

AstFn is pretty simple:

-- Representation for a function class.AstFn(AstBase) function AstFn:_init(spec) self:super(spec) end function AstFn:recur(fn) AstBase.recur(self, 'currentFn', fn) end function AstFn:register() if not self.llvmObj then self.llvmObj = backend.module.addFunction(self.name, self:type()) if self.spec.body then self:recur(function() self.spec.body:register() end) end end end function AstFn:emit() if self.spec.body then self:recur(function() self.spec.body:emit() end) end end

Other than registering the function with the module during register(), AstFn pretty much just defers to its body object. The only real complexity is in the helper that calculates the function’s type in LLVM terms:

-- Fetch the LLVMFunctionType for this function function AstFn:type() if not self.llvmType then local args = {} for i,v in ipairs(self.spec.args or {}) do table.insert(args, v:llvm()) end self.llvmType = llvm.functionType(self.spec.ret and self.spec.ret:llvm() or llvm.voidType(), typeArray(args), #args, self.spec.vararg and true or false) end return self.llvmType end

ret and args are AstType objects, so we need to call llvm() on them to get the representations LLVM consumes. Moving on to AstBody:

-- Representation for a function body class.AstBody(AstBase) function AstBody:_init(spec) self:super(spec) self.spec.namedBlocks = self.spec.namedBlocks or {} self.anonBlocks = {} self.namedVars = {} end function AstBody:recur(fn) AstBase.recur(self, 'currentBody', fn) end

Notice the "or" statement specifying a default for namedBlocks if one isn’t in the spec already. (This implies a slightly wierd wrinkle on my convention of storing some fields inside of "spec"; the AST objects can mutate the spec objects, so you’d better not pass a single spec structure to multiple constructors.)

function AstBody:register() self:recur(function() self.llvmAllocaBlock = llvm.appendBasicBlock(backend.currentFn.llvmObj, "") for k,v in pairs(self.spec.namedBlocks) do v.name = k v:register() end if not self.spec.entry then error("Block without entry") end self.entry = self:registerBlock(self.spec.entry) end) end

The body’s register() phase is mainly concerned with its blocks. Notice two blocks are special; the "alloca block" is the entry block where I register variables, so it must be registered with the function before anything else happens. The entry block is the block the alloca block transfers control to when it’s done. entry is mandatory for an AstBody spec, but it can be either a block object or a name, so registerBlock() is used.

-- Lookup/sanitization for a block during register phase. Accepts either a name or a block object. function AstBody:registerBlock(b) if type(b) == 'string' then return self.spec.namedBlocks[b] end b:register() return b end -- Lookup/sanitization for a var during register phase. Accepts either a name or a var object. function AstBody:registerVar(v, create) if type(v) == 'string' then if self.namedVars[v] then v = self.namedVars[v] else local name = v v = AstVar{} v.name = name end end if create then v.valid = true end v:register() return v end

Here’s those sanitize functions I mentioned. Two things to notice: The work of actually registering is passed off to the register implementations for AstBlock and AstVar; also, registerVar takes a "create" argument which discriminates whether we are writing to or reading from a function. If a variable is ever read from in a function where it’s never written to, I’ll want that to be an error.

function AstBody:emit() self:recur(function() -- Instantiate vars backend.builder.positionBuilderAtEnd(self.llvmAllocaBlock) local function alloca(v) v.llvmObj = backend.builder.buildAlloca(int32Type, v.name or "anon") end for _,v in pairs(self.namedVars) do alloca(v) end for _,v in ipairs(self.anonVars) do alloca(v) end backend.builder.buildBr(self.entry.llvmObj) -- Run blocks for k,v in pairs(self.spec.namedBlocks) do v:emit() end for _,v in ipairs(self.anonBlocks) do v:emit() end end) end

In its emission phase, a body generates the "alloca" block itself— again consisting only of the alloca instructions that reserve room for its variables on the stack, followed by a jump into the entry block— and then directs its blocks to emit themselves in no particular order. Notice that with both vars and blocks, there’s a split between "named" and "anonymous" items; "anonymous" blocks or vars wind up being those AstBlocks or AstVars that just sort of get discovered while traversing the AST without ever being assigned to a name. You can see this in action in AstBlock:register():

-- Representation for a block within a function class.AstBlock(AstBase) function AstBlock:_init(spec) self:super(spec) self.spec.exprs = self.spec.exprs or {} end function AstBlock:register() if not self.llvmObj then if not self.name then table.insert(backend.currentBody.anonBlocks, self) end self.llvmObj = llvm.appendBasicBlock(backend.currentFn.llvmObj, self.name or "anon") for _,v in ipairs(self.spec.exprs) do v:register() end if self.spec.exit then self.exit = backend.currentBody:registerBlock(self.spec.exit) end end end

Named blocks are already present in namedBlocks, in the AstBody‘s spec, but blocks without names are apparently being found somewhere in the tree and need to be added to anonBlocks when they’re discovered. Notice something a little bit subtle here: since blocks can point at each other through AstExprs that branch, register() on a single block might get called more than once, so the method has to detect it’s already been run and silently return in that case. Once again, technically my "Abstract Syntax Tree" is more of a directed graph and the register() process is doing a DFS on that graph.

function AstBlock:emit() backend.builder.positionBuilderAtEnd(self.llvmObj) for i,v in ipairs(self.spec.exprs) do v:llvm() end if self.exit then backend.builder.buildBr(self.exit.llvmObj) end end

Emission phase for a block is simple. The expressions (instructions) get rendered, and then as a convenience the AstBlock emits the jump out itself.

AstType and AstVar are also simple:

-- Representation for a variable type class.AstType(AstBase) function AstType:_init(spec) self:super(spec) end function AstType:render() if self.spec.literal then return self.spec.literal end if self.spec.ptr then return llvm.pointerType(self.spec.ptr:llvm(), 0) end return llvm.voidType() end -- Representation for a variable within a function class.AstVar(AstBase) function AstType:_init(spec) self:super(spec) end function AstVar:register() if self.registered then return end self.registered = true if self.name then backend.currentBody.namedVars[self.name] = self else table.insert(backend.currentBody.anonVars, self) end end

AstType I’ve actually inlined in this post already, and AstVar is pretty much the same registration behavior as AstBlock (with the difference that the variable names aren’t already known at the time the register phase starts).

The final class, AstExpr, is the most complicated one by far. Here’s its register phase:

-- Representation for an expression or statement within a function class.AstExpr(AstBase) function AstExpr:_init(spec) self:super(spec) end function AstExpr:register() if self.registered then return end self.registered = true local function registerValue() self.spec.value:register() end local switch = { bin = function() self.spec.value[1]:register() self.spec.value[2]:register() end, ['return'] = function() if self.spec.value then registerValue() end end, store = function() self.var = backend.currentBody:registerVar(self.spec.var, true) registerValue() end, load = function() self.var = backend.currentBody:registerVar(self.spec.var) end, deref = function() registerValue() if self.spec.idx then self.spec.idx:register() end end, branch = function() if self.spec.cond then self.spec.cond:register() end for _,k in ipairs({"y", "n"}) do if self.spec[k] then self.spec[k] = backend.currentBody:registerBlock(self.spec[k]) end end if not self.spec.y then error("Branch lacks a destination") end if self.spec.cond and not self.spec.n then error("Conditional branch lacks a \"no\"") end end, call = function() for _,v in ipairs(self.spec.args) do v:register() end end } local fn = switch[self.spec.op] if fn then fn() end end

AstExpr is complicated because it’s actually a bunch of different things. It has an op field which indicates what, exactly, this expression is doing. Someone more committed to OOP than me might have just made a different AstExpr for each op, but I just use a switch statement. Lua doesn’t have switch statements. I fake it with a table full of closures.

Other than a tiny amount of error checking, the register phase for an AstExpr is concerned only with furthering the DFS on the directed graph that is the "AST". Expressions, depending on what their op is, might reference a number of other AstExprs, AstVars or AstBlocks. Each of those needs to get their register() called.

AstExpr‘s render() is another big switch statement, so let’s take it a little bit at a time.

function AstExpr:render() local function literal(v) local t = type(v) if t == 'number' then -- Accept unwrapped numbers and strings return llvm.constInt(int32Type, v, false) elseif t == 'string' then return backend.builder.buildGlobalStringPtr(v, "") else -- Otherwise assume we've been passed an LLVM object return v end end local switch = { -- Lookup table on op literal = function() return literal(self.spec.value) end,

The literal op, used to represent a inlined value in the AST, has its own little helper function. I don’t think I had a particularly good reason why.

bin = function() -- Binary expression local a = self.spec.value[1]:llvm() local b = self.spec.value[2]:llvm() local function build(kind) return function() return backend.builder['build'..capitalize(kind)](a, b, "") end end local function iCmp(kind) return function() return backend.builder.buildICmp(llvm[kind], a, b, "") end end local switch2 = { ['>'] = iCmp('intSGT'), ['<'] = iCmp('intSLT'), ['>='] = iCmp('intSGE'), ['<='] = iCmp('intSLE'), ['=='] = iCmp('intEQ'), ['!='] = iCmp('intNE'), ['+'] = build('add'), ['-'] = build('sub'), ['*'] = build('mul'), ['/'] = build('sDiv'), ['%'] = build('sRem') } return switch2[self.spec.op2]() end,

bin has an op2 and its own inner switch. A fun thing about building a switch from a table of functions is that I can build the switch cases themselves using higher-order functions! This results in an incredibly clean looking switch statement at the cost of two nearly inscrutable helper functions. FUNCTIONAL PROGRAMMING.

Most of the ops there’s not much to say about— here, at last, I’m only doing a straightforward conversion from spec members to LLVM builder calls—

['return'] = function() if self.spec.value then local value = self.spec.value:llvm() backend.builder.buildRet(value) else return backend.builder.buildRetVoid() end end, getParam = function() return llvm.getParam(backend.currentFn.llvmObj, self.spec.idx) end, call = function() if not self.spec.fn then error("Function not found "..self.spec.fn) end local llvmArgs = {} -- map :llvm() on self.spec.args for _,v in ipairs(self.spec.args) do table.insert(llvmArgs, v:llvm()) end local fn = backend.currentModule:fn(self.spec.fn) return backend.builder.buildCall(fn.llvmObj, valueArray(llvmArgs), #llvmArgs, "") end,

—but a couple things stand out.

store, load and branch wind up accessing the .llvmObj of the blocks and variables they reference directly, since blocks and variables don’t have a render() and are required to have been constructed before the code reaches this point:

store = function() return backend.builder.buildStore( self.spec.value:llvm(), self.var.llvmObj ) end, load = function() return backend.builder.buildLoad( self.var.llvmObj, "" ) end, branch = function() if self.spec.cond then return backend.builder.buildCondBr(self.spec.cond:llvm(), self.spec.y.llvmObj, self.spec.n.llvmObj) else return backend.builder.buildBr(self.spec.y.llvmObj) end end,

Finally, deref combines the slightly confusing LLVM getelementptr instruction and a load into a single operation, which is slightly more apt for my uses:

deref = function() -- TODO: Allow idx to be a list local ptr = self.spec.value:llvm() if self.spec.idx then ptr = backend.builder.buildGEP(ptr, valueArray {self.spec.idx:llvm()}, 1, "") end return backend.builder.buildLoad(ptr, "") end } return switch[self.spec.op]() end

…and that’s it! I run my printFactors-as-an-AST sample file:

luajit tests/printFactorsAst.lua && cc -o main main.o && ./main 543

And the result is 3 181.

It works! The AST version of the printFactors program is a little shorter than the version where I write out all the LLVM-C calls by hand (144 lines vs. 99), but it requires this 370 line AST library so maybe that’s a bit of a wash. Whatever. Here’s the code so far.

This two-week detour completed, I finally get to start on the shadow language.

shadow.lua

The DSL framework’s external interface will be two functions: shadowFn, which returns an AstFn, and shadowModule, which returns an AstModule. This means I could potentially mix functions defined with shadowFn into a module defined with the AST interface and vice versa.

shadowModule takes the name of the module, and the "construction function" for the module. Remember, a "construction function" is where the shadow language is written— it’s a function with no arguments which I’m planning to inject the shadow-language symbols into. shadowModule winds up being pretty simple. All it has to do is build the list of injected symbols and then call the construction function:

-- Create an AstModule given a name and a construction function function shadowModule(name, gen) local module = AstModule{name=name} if gen then local env = { fn = emptyWithMetatable { __newindex = function(table, key, value) module.namedFns[key] = value end, __call = function(func, ...) return shadowFunction(...) end }, type = shadowType } execWithEnv(gen, env) end return module end

…and at the stage where you’re only defining modules, it doesn’t have much to inject. The only injected symbols in the module construction function are fn and type. Let’s consider fn first. You can only do two things with fn: assign to it to define a new function (__newindex), or call it to make an AstFn (__call). Since fn has the same interface as shadowFn I can just re-call shadowFn, and since the return value of shadowFn is already an AstFn I can just stick the return directly into the AstModule I’m building. type I’ll come back to in a moment.

execWithEnv isn’t something that exists in Lua, I had to build it:

-- Takes a function and a table, and executes fn with env as its _G. -- Resets everything at completion, but does not use env's metatable during execution. function execWithEnv(fn, env) local envOldMetatable = getmetatable(env) local fnOldEnv = getfenv(fn) -- Make fn's original env available as a parent scope local envMetatable = {__index=fnOldEnv} if (envOldMetatable) then setmetatable(env, nil) end -- Double-setting metatable not allowed setmetatable(env, envMetatable) -- Run fn setfenv(fn, env) fn() -- Restore original state setfenv(fn, fnOldEnv) setmetatable(env, envOldMetatable) end

setfenv is a pretty blunt instrument; it doesn’t preserve the original scope of the function you’re mutating, and the effects on the function it acts on are permanent. execWithEnv is a bit easier to work with, but making it that way takes some work.

Anyway, type. This is where things start to get interesting. Wiring the fn container in the module construction scope was simple because its inputs and outputs were just the AstFn objects from earlier. But the members of type are going to be shadow objects— magical semantic things loaded up with however much metatable trickery I need to get the behavior I want in my DSL.

What’s the behavior I want? Looking back at the original "printFactors" sample, I fetched some int types (int8 and int32) from this type container, and I used a .ptr field on the returned types to get pointer versions. So the only special behavior I need on a type object is that .ptr field:

-- Convert AstType to shadow type local function shadowTypeMake(v) local shadowTypeMetatable = {__index=cacheAndReturn(function(type, key) if key == 'ptr' then return shadowTypeMake(AstType{ptr=type.__astObject}) end return nil end)} local t = {__astObject=v} setmetatable(t, shadowTypeMetatable) return t end

A single shadow type has its AstType hidden inside as __astObject, and the ptr field is wired up to create and cache the pointer version on demand. So that’s a type object; now I need to make the type container.

-- Dispenser for shadow types, currently contains only int[number]s shadowType = { fromAst = shadowTypeMake, vararg = {} -- Arbitrary object for comparing by reference }

(shadowType.vararg is what you pass in as the final argument type if you have a function, like printf, which uses C vararg.)

shadowType has these two fixed members, and then a few more members are generated by the metatable:

setmetatable(shadowType, {__index=cacheAndReturn(function(_, k) local llvmType = nil -- Automatically look up int constructors in LLVM headers local _,_,intmatch = string.find(k, '^int(%d+)$') -- # of bits if intmatch then llvmType = llvm['int'..intmatch..'Type']() end if llvmType then return shadowTypeMake(AstType{literal=llvmType}) end return nil end)})

This is completely gratuitous. I need to make available six int types (1, 8, 16, 32, 64 and 128 bits); I could have just taken six lines to populate six members of shadowType, but instead I do eight lines of metaprogramming to make those six objects be generated on demand. Look, I write C all day at work. Let me have my fun.

Modules and types out of the way, it’s time to take on functions. A first pass at shadowFunction could look something like this:

-- Create an AstFn given a return shadow type, a list of arg shadow types, and a construction function function shadowFunction(ret, args, gen) local astArgs = {} local vararg = false for _,v in ipairs(args) do if shadowType.vararg == v then -- Not a real argument, just denotes this fn is vararg vararg = true else table.insert(astArgs, v.__astObject) end end local fn = AstFn{ret=ret.__astObject, args=astArgs, vararg=vararg} if gen then -- TODO: RUN CONSTRUCTION FUNCTION end return fn end

In this first pass I don’t actually call the construction function or create a function body— I just calculate the fn’s argument and return types and register the function as existing. (The types are accepted here as shadow objects, so "calculating" the types just means fetching the .__astObject fields out of the shadow types, then checking to see if the argument list ends with the magic vararg value.)

With exactly this code I’ve got so far and without filling in that TODO, I’ve got code that can generate a module, generate a function with no body, and generate types. This is actually enough to do a test (even a test that executes, since I can inject functions with bodies if I use AstFn). I decide to set a simple test up:

require("lib-lua/simple") require("lib-lua/practice/shadow") shadowModule("main", function() fn.printf = fn(type.int32, {type.int8.ptr, type.vararg}) fn.atoi = fn(type.int32, {type.int8.ptr}) end):emit()

And this gives me a working module!

; ModuleID = 'main' declare i32 @printf(i8*, ...) declare i32 @atoi(i8*)

…or, well, it gives me a working module once I fix five or eight typos, learn that if you don’t return nil at the end of a metatable __index function the default behavior is not what you expect, and then realize that I forgot to implement the vararg feature and go back and rewrite the last few paragraphs of this post to include it. Anyway! I’ve proven the shadow-language concept works.

Final lap

No more scaffolding. On the first post in this series, I daydreamed about writing a DSL in idiomatic Lua; now all I have to do to get that working is implement that TODO: RUN CONSTRUCTION FUNCTION from the last code stretch.

I decide to implement this one feature at a time, and write a small test for each feature I add. The simplest function body I could support here is:

require("lib-lua/simple") require("lib-lua/practice/shadow") shadowModule("main", function() fn.main = fn(int32, {type.int32, type.int8.ptr.ptr}, function() go._return(3) end) end):emit()

This snippet is a little different from the syntax I was using before. Something that didn’t occur to me when I wrote the original "printFactors" sample is that do, while, if and return are pure reserved words in Lua— they’re not even legal names for fields. To work around this I renamed do to go, and for while/if/return, I… couldn’t decide what to do, so I tacked on underscores for now.

TODO: RUN CONSTRUCTION FUNCTION now becomes:

local function nextBlock() return AstBlock{exprs={}} end local block = nextBlock() fn.spec.body = AstBody{entry = block} -- Given a Lua value, return an AstExpr local function literal(v) return AstExpr{op='literal', value=v} end -- Given either a Lua value or an AstExpr, return an AstExpr local function literalFilter(v) if astLiteralAllowed(v) then return literal(v) else return v end end execWithEnv(gen, { go = { _return = function(v) table.insert(block.spec.exprs, AstExpr{op='return', value=literalFilter(v)}) end } })

When a construction function is present, I create a body and a block for the fn being built, and then I run the construction function in execWithEnv like I did with modules. Right now the only thing I inject in the construction environment for fns is go, and all it needs to contain is _return. All _return does when you invoke it— all basically any line of "code" in the construction functions is going to do— is append an AstExpr object representing some instruction (in this case return) to the current block.

The literalFilter() helper is something I need because things lke _return() consume AST objects, but the argument I pass in might instead be a native Lua value like "3". I need to figure out what I have and convert it to an AST, and I delegate the task of figuring out what I have to a new function in ast.lua:

function astLiteralAllowed(v) local t = type(v) return t == 'number' or t == 'string' or t == 'cdata' end

I assume native Lua values are numbers, strings, or LLVM objects (which are easy to identify because Lua knows they came from C).

This simple test program doesn’t output anything, but I can treat its UNIX return value as "output" and verify it works:

$ (luajit tests/returnValueShadow.lua && cc -o main main.o && ./main) 2> /dev/null $ echo $? 3

My next test program, I add in variables:

shadowModule("main", function() fn.main = fn(type.int32, {type.int32, type.int8.ptr.ptr}, function() var.x = 5 var.x = 6 go._return(var.x) end) end):emit()

To make this test work this I inject var into the construction environment:

var = emptyWithMetatable({ __index = function(_, key) return AstExpr{op='load', var=key} end, __newindex = function(_, key, value) table.insert(block.spec.exprs, AstExpr{op='store', var=key, value=literalFilter(value)}) end }),

When you write a value into var (__newindex) it writes a store instruction as an AstExpr to the end of the current block’s expr list; when you read a value it writes nothing to the expr list, but returns a load AST that a different, value-writing function (in this case _return) might use.

So far pretty simple. In the next test I add in arithmetic:

shadowModule("main", function() fn.main = fn(type.int32, {type.int32, type.int8.ptr.ptr}, function() var.x = 5 var.y = var.x + 4 go._return(var.x + var.y) end) end):emit()

Suddenly things are less simple.

I’ve been vaguely toying with this idea of "shadow" versions of values— for example, the "shadow type" objects I created earlier— which if you remember are tables with a hidden __astObject inside, and a tricked-out metatable to allow manipulating that AST object with Lua syntax. For + to work on the value returned by var.x, it’s going to need to be a shadow value. I’ll create that shadow value with shadowExprMake():

-- Convert AstExpr to shadow expr local function shadowExprMake(v) local function binop(op2) return function(l, r) return shadowExprMake( AstExpr{op='bin', op2=op2, value={literalFilter(l), literalFilter(r)}} ) end end local shadowExprMetatable = { __add = binop('+'), __sub = binop('-'), __mul = binop('*'), __div = binop('/'), __mod = binop('%'), __eq = binop('=='), __lt = binop('<'), __le = binop('<=') } local t = {__astObject=v} setmetatable(t, shadowExprMetatable) return t end

Do you follow all the levels of indirection involved here? Calling shadowExprMake() constructs a metatable where __add is a function created by a higher order function binop(). When that __add function is invoked, it will "recursively" call shadowExprMake() to create a new shadow object which corresponds to whatever performing the + operation on the targets would logically result in. The code is at once compact, elegant and extremely difficult to follow!

I have to change the existing code for this to work: Before, _return, and the = operation on var, were expecting AST objects, and var.varname returned an AST object. But now I’m going to be working with shadow exprs instead of AstExprs directly, so I’m going to need to change var.varname to return shadow exprs:

__index = function(_, key) return shadowExprMake(AstExpr{op='load', var=key}) end

And I have to change literalFilter— which again, _return and operator-= on var filter everything they use through— to assume non-literal values are shadow exprs:

-- Given either a Lua value or shadow value, return an AstExpr local function filterToAst(v) if astLiteralAllowed(v) then return literal(v) else return v.__astObject end end

I rename literalFilter while I’m at it to something a little less vague.

Next feature is argument parameters:

shadowModule("main", function() fn.main = fn(type.int32, {type.int32, type.int8.ptr.ptr}, function(argc,argv) var.x = argc -- IE number of command-line arguments passed in var.y = var.x + 4 go._return(var.x + var.y) end) end):emit()

If a function takes arguments, the construction function will be passed in shadow-exprs for those arguments as the arguments to the construction function itself. This is pretty easy to implement because my AST already has a construct for "fetch the Nth parameter to the current function". I can modify shadowFunction, before it invokes the construction function, to create a table with one such AST object for each argument:

local genArgs = {} for i=1,#args do table.insert(genArgs, shadowExprMake(AstExpr{op='getParam',idx=i-1})) end

Then I can pass that in to my execWithArgs function, where it gets unpacked into the construction function invocation using weird Lua magic:

function execWithEnv(fn, env, args) -- [snip] -- Run fn setfenv(fn, env) fn(unpack(args or {}))

Shadow language code can now accept arguments when it defines a function. Next step is to allow the code to call functions. The test program for that would be:

shadowModule("main", function() fn.atoi = fn(typ.int32, {typ.int8.ptr}) fn.main = fn(typ.int32, {typ.int32, typ.int8.ptr.ptr}, function(argc,argv) var.x = fn.atoi("3") go._return(var.x + 1) end) end):emit()

Somewhere at about this point I realized that one of the injected symbols in my shadow language is type, which isn’t a reserved word in Lua, but it is the name of a frightfully useful builtin function, so I renamed the type container to typ.

Anyway, to make this work I inject an fn into the construction environment:

fn = emptyWithMetatable({ __index = function(_, key) return emptyWithMetatable({ __call = function(_, ...) local args = {...} for i=1,#args do args[i] = filterToAst(args[i]) end return shadowExprMake(AstExpr{op='call', fn=key, args=args}) end }) end }),

I already injected an fn in the environment for module construction functions, but the fn for function construction functions (…ugh, did I just write that?) is separate and behaves differently. With the new fn you can look up a key on it and it returns a shadow object which will imitate a function you can call.

This fn implementation makes the test work, but it isn’t enough. As described above, I need two versions of fn: the expression form I just implemented, and a statement form (go.fn). Once again, this is because the expression form only creates an AST and does not actually add that AST to the current block.

To make this work I unpack the meat of my initial attempt at fn into a higher-order function:

local function makeFnInvoke(key) return function(...) local args = {...} for i=1,#args do args[i] = filterToAst(args[i]) end return shadowExprMake(AstExpr{op='call', fn=key, args=args}) end end

Once I’ve done this fn looks like:

_fn = emptyWithMetatable({ __index = function(_, key) return makeFnInvoke(key) end }),

In other words, makeFnInvoke takes a key and returns a callable entity that creates an AST for the given function using the args passed into it. I can also use this to make the go.fn:

fn = emptyWithMetatable({ __index = function(_, key) local fn = makeFnInvoke(key) return function(...) table.insert(block.spec.exprs, fn(...).__astObject) end end }),

fn(...).__astObject. I’m just going to sit here and savor that for a moment. …and also feel a bit guilty.

Functions are done. Next feature is indexing arrays. The test program for arrays runs its first command line argument through atoi and returns it as a UNIX status code:

shadowModule("main", function() fn.atoi = fn(typ.int32, {typ.int8.ptr}) fn.main = fn(typ.int32, {typ.int32, typ.int8.ptr.ptr}, function(argc,argv) var.x = fn.atoi( argv[1] ) go._return(var.x + 10) end) end):emit()

This, too, is very simple to implement. Most of these features have been very simple to implement. This one literally only requires three lines, a single field added to the shadow expr metatable:

__index = function(v, key) return shadowExprMake( AstExpr{op='deref', value=v.__astObject, idx=literalFilter(key)}) end

v is a shadow object; indexing it creates a shadow object that indexes the value inside it. Easy.

At about this point, things stop getting easy.

There’s only two features left to implement: if and while. These get interesting because they create not just values, but also blocks. Here’s my test for the if statement:

shadowModule("main", function() fn.printf = fn(typ.int32, {typ.int8.ptr, typ.vararg}) fn.main = fn(typ.int32, {typ.int32, typ.int8.ptr.ptr}, function(argc,argv) go._if(argc >= 3, function() go.fn.printf("Argc %d less than 3\n", argc) end, function() go.fn.printf("Argc %d greater or equal to 3\n", argc) end) go.fn.printf("Done\n") go._return(0) end) end):emit()

Up until now, there have only been construction functions in two places: Module construction when invoking shadowModule, and fn construction when invoking fn. But now we have construction functions nested inside the construction function of an single fn, constructing blocks within that fn.

Implementing that means a little bit of restructuring: For starters, I need to take my huge execWithEnv invocation I’ve been building and put it in a local function:

local function build(gen, genArgs) execWithEnv(gen, { -- All injected environment for an fn construction function goes here }, genArgs) end build(gen, genArgs)

This means if I’m inside one of the closures injected into the environment— for example, the one implementing _if— I will be able to recursively call build() from there if I want to invoke a construction function.

Before I can move on, I hit a really unpleasant oddity. I overloaded all of the normal operations you can perform on a table— +. -, >, ==. The idea of my overloads is that instead of performing whatever calculation the operator would normally describe, the overloaded version returns an AST describing the calculation. For + and - this works great. But the first time I try to run code using a comparison— the >= in the sample— it fails. The Lua interpreter claims that >= cannot be performed on a table.

It turns out, looking in the documentation, that comparison operators are different from other operators in Lua. First off, there are restrictions on when they even get invoked. There’s a semi-complicated algorithm Lua follows to decide whether to invoke a metatable comparison function and if so which one to invoke. One of the steps in the algorithm is that it only runs the comparison if the two tables have the same metatable. Here there’s an oddness. All of my shadow objects have the same metatable functionally. But they’re not the same in the VM memory, because I create a new copy of the metatable for every single shadow object I create. I rewrite that shadowExprMake so that only one metatable exists:

-- Go far out of way to define shadowExprMetatable outside closure so comparison operators work local function binop(op2) return function(l, r) return shadowExprMake( AstExpr{op='bin', op2=op2, value={filterToAst(l), filterToAst(r)}} ) end end local shadowExprMetatable = { -- Metatable members here } -- Convert AstType to shadow expr function shadowExprMake(v) local t = {__astObject=v} setmetatable(t, shadowExprMetatable) return t end

It still doesn’t work; my metatable comparison functions are now being invoked, but somehow they’re always evaluating to true. It turns out that apparently comparison operators in Lua coerce their return values to true and false. This doesn’t seem to be in the documentation. It’s just something that Lua and LuaJIT both do (a nice person on Twitter actually dug up the Lua 5 source code and confirmed this). I optimized my shadowExprMake() function for nothing!

Although the language forcing comparison operators to always return true or false probably fits most users’ expectations, it kind of kills my weird overloading idea. I’m forced to inject the comparison operators into the namespace as functions. The fn-construction context picks up a new symbol get:

get = { literal = filterToAst, lt = binop('<'), gt = binop('>'), lte = binop('<='), gte = binop('>=') },

With that cleared up, I’m free to go ahead and implement the go._if function. This one’s complicated, so I’m going to go over it a little bit at a time.

_if = function(cond, y, n) local branchExpr = AstExpr{op='branch', cond=literalFilter(cond)} table.insert(block.spec.exprs, branchExpr)

We’ve been working with an existing "block"; we want to append to its end a conditional branch instruction. The conditional branch instruction I defined here has a condition, but it’s missing its y and n blocks; I’m not done editing it.

local afterBlock = nextBlock() local yBlock = nextBlock()

This code creates a block for the code for the if’s "success" path, and a block for flow control to resume from after the if statement is completed.

block = yBlock build(y) block.spec.exit = afterBlock

Notice something a bit weird: build is a local function, closure. It makes reference throughout to a variable block which is local to the shadowFunction() invocation that build exists inside. This makes block effectively a bit of shared state for one full run through shadowFunction(). So in this code I set block to a new value immediately before calling build(y); this means the y construction function will write its expressions into the block I specified.

After running, the y-block dumps into the afterblock.

local nBlock = afterBlock if n then nBlock = nextBlock() block = nBlock build(n) block.spec.exit = afterBlock end

What’s happening in this code is that the "no" path is optional, so if the user specified an n construction function I want to branch to a new block for that, but if the user did not then a "no" result branches to the afterblock.

branchExpr.spec.y = yBlock branchExpr.spec.n = nBlock block = afterBlock end

I have now created both the y and n blocks, so I can add links to them in the original branchExpr. The last thing I do before the function ends is set the shared block to the afterblock, so any statements post-_if will be written into that.

So that’s go._if. The go._while implementation— which I do not bother writing a test case for— is much similar:

_while = function(cond, loop) local branchBlock = nextBlock() local loopBlock = nextBlock() local afterBlock = nextBlock() block.spec.exit = branchBlock local branchExpr = AstExpr{op='branch', cond=filterToAst(cond), y=loopBlock, n=afterBlock} table.insert(branchBlock.spec.exprs, branchExpr) block = loopBlock build(loop) block.spec.exit = branchBlock block = afterBlock end

This sets the current block to immediately enter into a "branch block"; the branch block contains only a conditional branch instruction, which jumps either to the loop body or to the block which follows the _while. Pretty straightforward.

I don’t write a test case for _while because at this point, I have enough features working together I can just use my original sample program— the printFactors program— as the test case! I pull it out again and try to run it. I run into three problems.

Problem #1: It’s ugly? It’s just. It’s really ugly. My attempts to avoid Lua keywords have left the syntax of the shadow language inconsistent. Some fields have underscores before them, some don’t. One symbol (typ) is misspelled rather than using an underscore, another switched to a slightly weird synonym (go). If I were writing this I’d never be able to remember what to use where.

I decide to change my symbol naming rules: If a field is "injected"— if it is part of the shadow language rather than being part of your computer program— it has an underscore before it. Period. This lets me avoid intentional misspelling, and it makes my syntax consistent. With the new rules, my "printFactors" program looks like this:

require("lib-lua/simple") require("lib-lua/practice/shadow") shadowModule("main", function() _fn.printf = _fn(_type.int32, {_type.int8.ptr, _type.vararg}) _fn.atoi = _fn(_type.int32, {_type.int8.ptr}) _fn.printFactors = _fn(nil, {_type.int32}, function(input) _var.value = input _var.divisor = 2 _do._while( _get._gte(_var.value, _var.divisor), function() _do._if( _get._eq(_var.value % _var.divisor, 0), function() _do._fn.printf("%d ", _var.divisor) _var.value = _var.value / _var.divisor end, function() _var.divisor = _var.divisor + 1 end) end) _do._fn.printf("\n") end) _fn.main = _fn(_type.int32, {_type.int32, _type.int8.ptr.ptr}, function(argc, argv) _do._if( _get._eq(argc, 2), function() _do._fn.printFactors( _fn.atoi( argv[1] ) ) _do._return(0) end, function() _do._fn.printf("Please enter exactly one number as argument\n") _do._return(1) end) end) end):emit()

So _var has an underscore, and both _do and _if (i.e. _do._if) have underscores, because those are things installed by the language. But the value in _var.value doesn’t have an underscore because that is a user variable, and the lack of the underscore helps you tell that, and this means that the underscore actually serves a purpose and isn’t just a dodge on Lua’s reserved words list. int32 in _type.int32 does not have an underscore and I don’t know if that’s consistent or not.

This is all… not terrible! The new syntax is not as nice as the version I put earlier in this post, but if it’s a prototyping language rather than something I’d use day to day maybe it’s okay.

Problems #2 and #3 come in the form of fatal errors from LLVM’s verifier (which I figured out how to turn on between posts two and three in this series) when I try to run "printFactors". Both errors claim that I failed to end a block correctly— LLVM requires every block to end with either a branch statement or a return statement. (If the verifier hadn’t stopped me, this would have resulted in a crash when I tried to emit an object file.) The two errors turn out to have happened for different reasons.

Problem #2 has to do with void functions. _fn.printFactors returns void, and in my sample program I don’t end the function with a _do._return() because any sensible language will do that implicitly for you. But I never implemented the part where it does it for you. I do that now.

I go back to ast.lua and add this helper function:

function AstBlock:unterminated() local exprCount = #self.spec.exprs if exprCount < 1 then return true end local finalOp = self.spec.exprs[exprCount].spec.op return not (finalOp == 'branch' or finalOp == 'return') end

Which returns true if the block fails to follow LLVM’s termination rules. All it does is check the final instruction’s opcode. Once I have this helper, I can implement automatic-return-for-void-functions easily by adding this to the end of shadowFunction():

if not ret and block:unterminated() then table.insert(block.spec.exprs, AstExpr({op='return'})) end

(To cover a couple of details: not ret in this context denotes "no return type"; and I only have to run this code once per function, not every block, because the rules that got us to this point will have already set spec.exit on every block except the final one.)

Problem #3 is a little more subtle than this, and is an accidental byproduct of the way I chain blocks in a do._if or do._while. In my sample, main() has a return type. It ends with a do._if, both branches of which end with an explicit return. But! When I run shadowFunction() to process all this, the do._if silently creates a block for the code following the do._if. But there is no code following the do._if, therefore nothing ever puts a return into the implicitly created block, therefore LLVM is unhappy.

This is, unambiguously, the fault of the shadow language code— it’s specifying an invalid block. However, wisely or unwisely, I decide to fix the problem in the AST code. The shadow language created a nonsensical situation here, but it created a detectably nonsensical situation.

The thing that’s weird about the blocks in the do._if is that they have spec.exit set (because do._if sets it unconditionally) but they also end with a do._return (because that’s what’s in the code). I decide to make it a rule that if a block ends with a branch or return, any redundant spec.exit is ignored. This literally requires nothing more than adding the words and self:unterminated() to the code in ast.lua that processes self.spec.exit:

if self.spec.exit and self:unterminated() then self.exit = backend.currentBody:registerBlock(self.spec.exit) end

Problem solved; if the redundant self.spec.exit is not processed, the useless extra block is never registered, which means it’s not in the function, which means LLVM never finds out about it and has nothing to complain about.

These last two errors fixed, I can build my program—

$ luajit generate.lua 2> main.asm && cc -o main main.o $ wc -l main.asm 63 main.asm $ ./main 1024 2 2 2 2 2 2 2 2 2 2

The generated assembly is 63 lines, whereas the printFactors program in the shadow language is 36 lines— for the first time in this project, my code to generate a program is shorter than the assembly it generates!— and the program works.Implementing the "shadow language" completely took only three days; the writeup of it you’ve just finished reading took over a week.